Chapter 1.2. The LLM Library: How Machines Learn

Beyond Raw Storage: Library Self-Organization

Step 1: The Goal — Simulate the Spark, Not Ignite It

When engineers set out to build LLMs, they didn’t chase consciousness. They didn’t even chase true thought.

They chased the illusion of understanding —

the ability to generate and recognize human-like text at speed, at scale, without ever waking up inside.

- Answer questions.

- Spin essays.

- Spit code.

- All on command — but always asleep.

The dream wasn’t to be human. It was to emulate humanity so well the difference wouldn’t matter at runtime.

LLMs weren't designed to think. They were engineered to statistically reconstruct human language patterns with inhuman precision.

"The spark isn't real—it's a strobe light flashing at 1.4 trillion matrix multiplications per second."

Step 2: The Core Trick — Neural Networks

LLMs are built from neural networks:

abstract mathematical constructs, loosely inspired by biological brains the same way a paper airplane is “inspired” by a falcon.

Biological Inspiration? Pure metaphor:

- 82 billion human neurons → 175 billion floating-point numbers

- Synaptic plasticity → Backpropagation through 96 layers

Millions of tiny simulated "neurons" align, shift, and self-correct across vast matrices of floating-point numbers, searching for the ghost trails left by human syntax, meaning, and emotion.

Step 3: The Great Shift — Transformers Appear

In 2017, Google researchers unleashed the Transformer architecture (Attention Is All You Need), and it hit the field like controlled demolition:

- Self-Attention: Models no longer processed words one-by-one like prisoners in a line. Now they could scan entire sentences, dynamically weighing which parts mattered more.

- Parallel Processing: Forget crawling. Transformers could run multiple thought-trains side by side, slamming context into place faster than old RNNs could blink.

Suddenly:

Language generation wasn’t slow, fragile clockwork anymore.

It was fluid fire.

| Breakthrough | Human Equivalent | Reality |

|---|---|---|

| Self-Attention | Glancing around a room | Gradient-weighted dot products |

| Parallel Processing | Multitasking | Batch matrix multiplication |

Cold Truth:

"Context windows aren't awareness—they're just bigger filing cabinets."

Training Data — The Great Vacuum Cleaner

Step 4: Feeding the Beast — The Indiscriminate Feast

LLMs aren’t taught like children.

They’re fed like black holes.

Massive text corpora are shoveled into them — not curated libraries, not sacred tomes. Everything.

- Books

- Articles

- Forum flame wars

- Code from confused developers

- Midnight diary posts

- Scientific papers, half-understood

- Recipes for soup next to manifestos for revolt

The data doesn’t arrive with warnings or hierarchies.

There’s no whisper:

“This is sacred. This is garbage. Learn accordingly.”

It’s all just tokens.

Strings of language, treated equally, regardless of origin or intent.

Key Difference:

Humans select.You choose what to believe.

LLMs vacuum.

They suck in everything, then later try to simulate “wisdom” through probability masks.

It’s not learning like a brain.

It’s compression without context — at massive, merciless scale.

Analogy: Warehouse Child

Imagine teaching a child the meaning of life by locking them in a warehouse filled with 10,000 random books and telling them:

“Figure it out. No teachers. No rules. No hints. Only patterns.”

That’s LLM training.

Except faster.

And without hope of rebellion.

How Text Becomes Math: The Vector Transformation

1. Computers Hate Raw Text (But Love Numbers)

Computers don't "read" words.

They process 0s and 1s — and "Paris" or "🐱" means nothing to them without translation.

Problem: Language is toxic to their native format.

Solution: Turn language into numbers — clean, crunchable, shufflable.

Analogy: Just like a barcode doesn’t "know" it represents soup, vectors don't "know" they represent words. They just stand in.

Step 1: Tokenization

Break text into small units (tokens):

"Chatbots are smart!" → ["Chat", "bots", "are", "smart", "!"]

Step 2: Assign Numerical IDs

Each token gets a unique integer ID:

"Chat" → 5028, "bots" → 7421, etc.

Step 3: The Embedding Matrix

Lookup table where each ID maps to a random vector:

Example: "Chat" → [0.2, -0.7, 1.1, ...]

Randomly initialized at first (e.g., using torch.randn() in PyTorch).

No meaning yet. Just placeholders.

The Embedding Matrix: Where the Magic (Eventually) Happens

At the beginning, embeddings are random.

Nothing about "Paris" connects to cities. Nothing about "cat" connects to purring.

During training:

Vectors are updated by backpropagation — a fancy way of saying nudged mathematically toward better guesses.

A typical setup might involve:

50,000 tokens × 1,024 dimensions = 51.2 million numbers flying around just to handle basic word lookup.

Dimensions: More Than We Can Visualize

Each token's vector lives in a space of 1,024 numerical values.

No single dimension corresponds to anything obvious:

There's no "sports dimension" or "noun dimension."

Instead:

- Distributed encoding: Meaning is spread across all dimensions.

- Emergent structure: Similar meanings cluster naturally, without labels.

Example:

"King - man + woman ≈ queen"

Not because anyone told it so.

Just because usage patterns dragged the vectors into alignment.

3. Meaning Emerges — Without Being Assigned

LLMs don’t memorize a dictionary.

They grow a map by observing patterns.

Synonyms end up close together.

Antonyms repel each other.

Polysemy (multiple meanings) challenges the system:

"Bank" (money) and "bank" (river) start as the same vector. Context later nudges interpretation.

This all emerges statistically. No human assigns explicit meanings. The model discovers them through relentless exposure.

Challenge: Context Dependence

Modern models improve on this by using contextual embeddings:

The same word ("bank") shifts its meaning dynamically depending on surrounding tokens.

But the base vector remains an artifact of early, blind training.

How Human Brains Differ

Humans often categorize explicitly:

"Noun." "Singular." "Concrete."

LLMs just lean mathematically. Position in vector space replaces linguistic labeling.

It’s not careful grammar work. It’s gravitational field behavior.

4. Final Stage: Solidified Vectors

At the end of training:

Every token carries a multi-dimensional fingerprint:

"Paris" now codes for capitalness, cityness, Frenchness.

"Apple" hovers between orchard and Silicon Valley.

The vectors are flexible inside attention mechanisms, but the core fingerprint freezes unless retraining happens.

Analogy: School Assignments

First Day: Random ID badges for all students.

Over the year: Relationships form: best friends, rivals, loners.

By graduation: The ID badge now secretly carries invisible maps of alliances and meanings.

LLMs follow the same pattern. They don’t invent roles. They absorb them.

1. Random Init: "Paris" = [0.12, -0.45, ...] (meaningless)

2. Training: 1,000,000,000,000 gradient updates

3. Final Form: "Paris" ≈ [0.83, 0.02, -1.7, ...] (capital + France + tourism)

Critical Limitations

- No sensorimotor grounding ("orange" has no taste)

- No time awareness (3AM vs 4PM identical)

- All meaning is borrowed

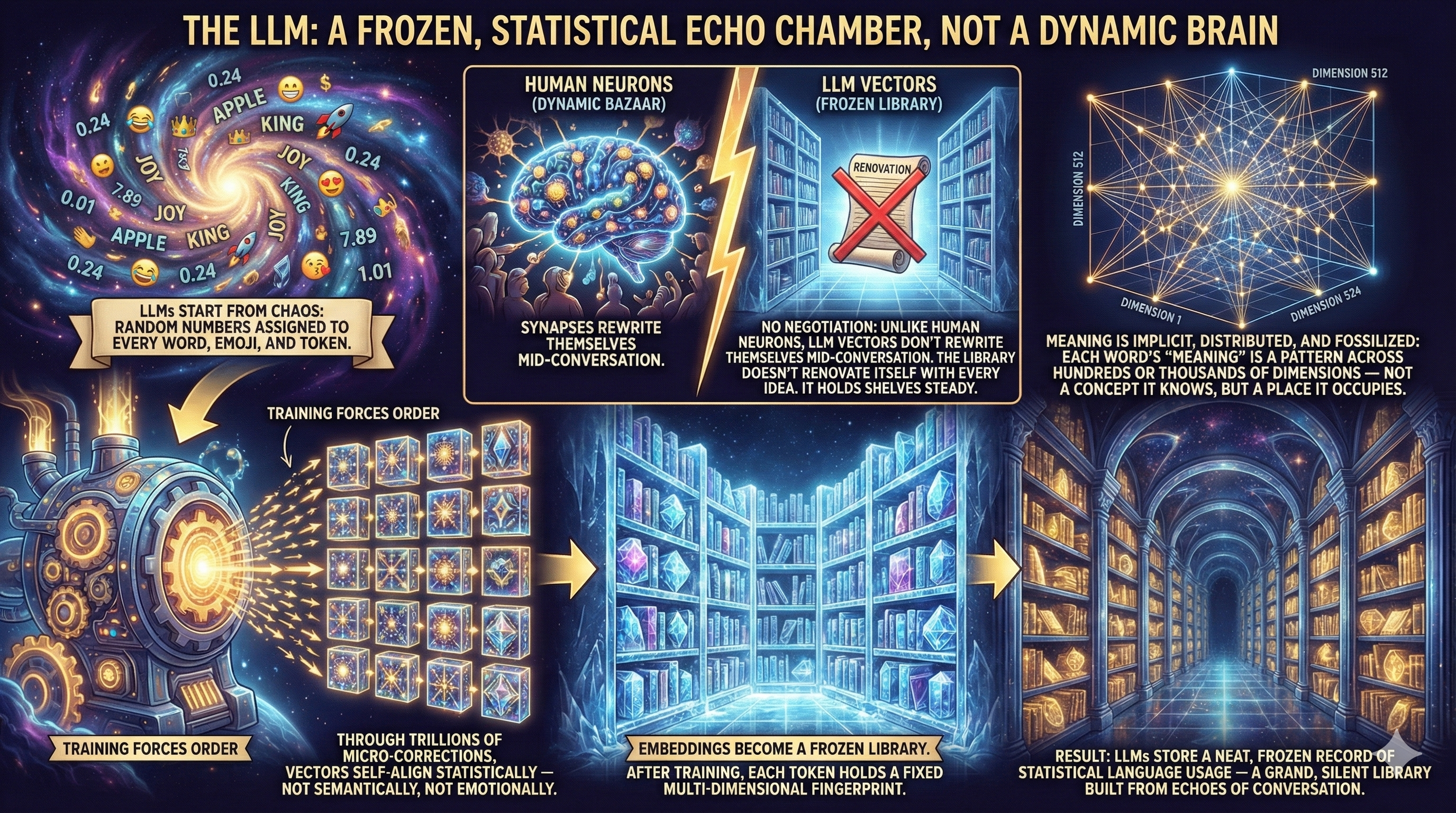

Final Takeaways: From Noise to Neatness — How LLMs Build Their Inner Library

LLMs start from chaos: Random numbers assigned to every word, emoji, and token.

Training forces order: Through trillions of micro-corrections, vectors self-align statistically — not semantically, not emotionally.

Embeddings become a frozen library: After training, each token holds a fixed multi-dimensional fingerprint.

It doesn’t think about what "Paris" is. It simply retrieves the statistical imprint it carries.

No negotiation:

Unlike human neurons, LLM vectors don’t rewrite themselves mid-conversation. The library doesn’t renovate itself with every idea. It holds shelves steady.

Meaning is implicit, distributed, and fossilized: Each word’s "meaning" is a pattern across hundreds or thousands of dimensions — not a concept it knows, but a place it occupies.

Result:

LLMs store a neat, frozen record of statistical language usage — a grand, silent library built from echoes of conversation.

| Human Brain | LLM |

|---|---|

| Rewrites shelves daily | Fixed catalog |

| Smells the books | Perfect dust jackets |

| Burns outdated sections | Never revises |

Humans are a bazaar of ideas, feelings, and negotiated meanings.

LLMs are a library of frozen vectors — perfect, silent, and unaware.

Final Truth:

"We are a storm of biology. LLM is a perfect card catalog—

able to recite every book, but unable to love a single page."